Task 2: in car

This tutorial walks through the process of running the CAD1 Task 2 baseline using the shell interface.

1. Cloning the Clarity Repository

We first need to clone the Clarity repository and install the Clarity package.

# Clean directory
from pathlib import Path
import shutil

dirpath = Path('clarity')
if dirpath.exists() and dirpath.is_dir():
    shutil.rmtree(dirpath)

%%capture
!git clone --quiet https://github.com/claritychallenge/clarity.git
%cd clarity
!git checkout v0.3.3
%pip install -e .
%pip install seedir
%cd recipes/cad1/task2/baseline

2. Dataset

We will be using the Task 2 demo data: music audio, binaural room impulse responses (BRIRs) and listener metadata.

%%capture
import gdown
# The archive is downloaded into the current (baseline) directory, so no move is needed
!gdown 1OWn0w39t_aeOOh514wRUgTw5uSwsoB17
!tar -xvf cadenza_task2_data_demo.tar.xz
!rm cadenza_task2_data_demo.tar.xz

import seedir as sd

sd.seedir('cad1/task2/', style='lines', depthlimit=4, exclude_folders='ipy*', regex=True)

task2/
├─metadata/
│ ├─listeners.valid.json
│ ├─eBrird_BRIR.json
│ ├─scenes_listeners.json
│ ├─music.valid.json
│ └─scenes.json
└─audio/
  ├─eBrird/
  │ ├─Anechoic/
  │ │ ├─Anechoic.txt
  │ │ └─audio/
  │ └─Car/
  │   └─audio/
  └─music/
    └─validation/
      ├─Instrumental/
      ├─Pop/
      ├─Rock/
      ├─Orchestral/
      ├─Classical/
      └─International/

3. Baseline

Note

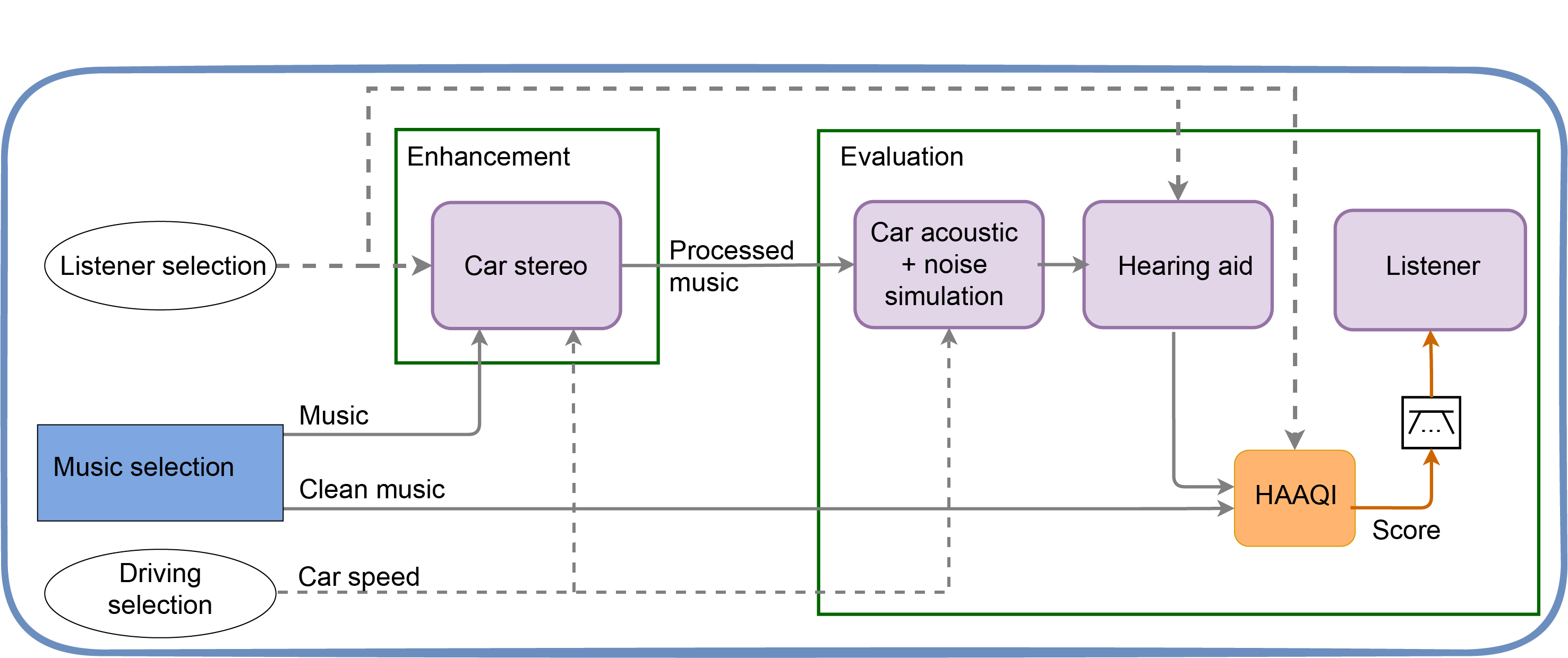

The baseline recipe is divided into two stages: Enhancement and Evaluation.

3.1 Enhancement

The enhancement script, enhance.py, contains the following key functions.

3.1.1 Enhance

The main function, which orchestrates the enhancement process.

It takes the configuration loaded from config.yaml as its input parameter.

@hydra.main(config_path="", config_name="config")
def enhance(config: DictConfig) -> None:
    """
    Run the music enhancement.
    The baseline system is a dummy processor that returns the input signal.

    Args:
        config (dict): Dictionary of configuration options for enhancing music.
    """

3.1.2 Enhance Song

A function that processes a single song-listener pair.

It adjusts the level of the song according to the listener's average hearing loss in order to prevent clipping.

def enhance_song(
    waveform: np.ndarray,
    listener_audiograms: dict,
    config: DictConfig,
) -> tuple[np.ndarray, np.ndarray]:
    """
    Enhance a single song for a listener.

    Baseline enhancement returns the signal with a loudness
    of -14 LUFS if the average hearing loss is below 50 dB HL,
    and -11 LUFS otherwise.

    Args:
        waveform (np.ndarray): The waveform of the song.
        listener_audiograms (dict): The audiograms of the listener.
        config (dict): Dictionary of configuration options for enhancing music.

    Returns:
        out_left (np.ndarray): The enhanced left channel.
        out_right (np.ndarray): The enhanced right channel.
    """

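The level rule stated in the enhance_song docstring can be sketched in plain Python. This is an illustration under assumptions, not the recipe's implementation: the helper names are hypothetical, the average is assumed to be taken over 500, 1000, 2000 and 4000 Hz for a single ear, and the measured loudness is passed in rather than computed with a loudness meter such as pyloudnorm. Note also that config.yaml defines enhance.average_level: -14 and enhance.min_level: -19, so check enhance.py for the values the recipe actually uses.

```python
# Hypothetical sketch of the level rule stated in the enhance_song docstring.
# Not the recipe's code: helper names and averaging frequencies are assumptions.

# Assumption: average over these audiogram frequencies (Hz), for one ear.
AVERAGE_FREQS = (500, 1000, 2000, 4000)


def average_hearing_loss(cfs, levels, freqs=AVERAGE_FREQS):
    """Mean hearing level (dB HL) over the selected audiogram frequencies."""
    selected = [level for cf, level in zip(cfs, levels) if cf in freqs]
    if not selected:
        raise ValueError("audiogram contains none of the selected frequencies")
    return sum(selected) / len(selected)


def target_lufs(avg_loss_db, threshold_db=50.0, low_loss_lufs=-14.0, high_loss_lufs=-11.0):
    """Target loudness: low_loss_lufs below the threshold, high_loss_lufs otherwise."""
    return low_loss_lufs if avg_loss_db < threshold_db else high_loss_lufs


def gain_to_target(measured_lufs, target):
    """Linear amplitude gain that moves a signal from measured_lufs to target.

    Loudness is measured on a dB scale, so a change of g LU corresponds to
    multiplying the samples by 10 ** (g / 20).
    """
    return 10 ** ((target - measured_lufs) / 20)


cfs = [250, 500, 1000, 2000, 4000, 6000]
levels = [20, 30, 40, 50, 60, 70]
loss = average_hearing_loss(cfs, levels)  # mean of 30, 40, 50, 60 -> 45.0
target = target_lufs(loss)                # 45 < 50 -> -14.0
gain = gain_to_target(-20.0, target)      # +6 LU -> about 2.0 (10 ** 0.3)
```

Keeping the threshold and the two target levels as parameters makes it easy to swap in whatever values the recipe's configuration actually uses.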
3.2 Evaluation

The evaluation script assumes that the exp output directory already exists; this is the output directory written by the enhance.py script.

Caution

The evaluation script must not be modified in any way.

3.2.1 Run Calculate Audio Quality

The main function, which orchestrates the evaluation.

@hydra.main(config_path="", config_name="config")
def run_calculate_audio_quality(config: DictConfig) -> None:
    """Evaluate the enhanced signals using the HAAQI metric."""

3.2.2 Evaluate Scene

Evaluates a single scene and returns the HAAQI scores for the left and right ears.

def evaluate_scene(
    ref_signal: np.ndarray,
    enh_signal: np.ndarray,
    sample_rate: int,
    scene_id: str,
    current_scene: dict,
    listener_audiogram: dict,
    car_scene_acoustic: CarSceneAcoustics,
    hrtf: dict,
    config: DictConfig,
) -> tuple[float, float]:
    """Evaluate a single scene and return HAAQI scores for left and right ears.

    Args:
        ref_signal (np.ndarray): A numpy array of shape (2, n_samples)
            containing the reference signal.
        enh_signal (np.ndarray): A numpy array of shape (2, n_samples)
            containing the enhanced signal.
        sample_rate (int): The sampling frequency of the reference and enhanced signals.
        scene_id (str): A string identifier for the scene being evaluated.
        current_scene (dict): A dictionary containing information about the scene being
            evaluated, including the song ID, the listener ID, the car noise type, and
            the split.
        listener_audiogram (dict): A dictionary containing the listener's audiogram
            data, including the center frequencies (cfs) and the hearing levels for both
            ears (audiogram_levels_l and audiogram_levels_r).
        car_scene_acoustic (CarSceneAcoustics): An instance of the CarSceneAcoustics
            class, which is used to generate car noise and add binaural room impulse
            responses (BRIRs) to the enhanced signal.
        hrtf (dict): A dictionary containing the head-related transfer functions (HRTFs)
            for the listener being evaluated. This includes the left and right HRTFs for
            the car and the anechoic room.
        config (DictConfig): A dictionary-like object containing various configuration
            parameters for the evaluation. This includes the path to the enhanced signal
            folder, the path to the music directory, and a flag indicating whether to set
            a random seed.

    Returns:
        Tuple[float, float]: A tuple containing HAAQI scores for left and right ears.
    """

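The evaluation log at the end of this tutorial prints one "combined score" per scene, while evaluate_scene returns separate left- and right-ear HAAQI scores. A minimal sketch of how the two could be combined follows, assuming the combined score is the plain mean of the two ears and the overall system score is the mean over scenes. Both are assumptions, so check evaluate.py before relying on them; the example scores are placeholders, not baseline results.

```python
from statistics import mean


def combined_score(haaqi_left: float, haaqi_right: float) -> float:
    """Assumed combination: the mean of the left- and right-ear HAAQI scores."""
    return (haaqi_left + haaqi_right) / 2


def system_score(scene_scores: dict[str, tuple[float, float]]) -> float:
    """Assumed aggregation: the mean of the per-scene combined scores."""
    return mean(combined_score(left, right) for left, right in scene_scores.values())


# Placeholder (left, right) scores per scene, not results from the baseline.
scores = {"scene_a": (0.2, 0.1), "scene_b": (0.3, 0.5)}
overall = system_score(scores)  # mean of 0.15 and 0.4 -> about 0.275
```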
3.3 Car Scene Acoustics

The CarSceneAcoustics class contains all the logic for simulating the car acoustics. It generates the car noise, applies the head-related transfer function (HRTF) taking the head direction into account, and applies the hearing aid (HA) amplification.
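Applying BRIRs to a two-channel car-stereo signal amounts to convolving each loudspeaker channel with its impulse response to each ear and summing the contributions at each ear. The pure-Python sketch below shows that idea only; the dictionary keys (for example "left_speaker_to_left_ear") and the toy impulse responses are hypothetical and do not reflect the layout of eBrird_BRIR.json or the internals of add_hrtf_to_stereo_signal.

```python
def convolve(x, h):
    """Full linear convolution of two sequences (length len(x) + len(h) - 1)."""
    out = [0.0] * (len(x) + len(h) - 1)
    for i, xi in enumerate(x):
        for j, hj in enumerate(h):
            out[i + j] += xi * hj
    return out


def add(a, b):
    """Element-wise sum of two equal-length sequences."""
    return [p + q for p, q in zip(a, b)]


def render_binaural(left_ch, right_ch, brirs):
    """Return (left_ear, right_ear) for a stereo signal played over two speakers.

    brirs: hypothetical dict with one impulse response per speaker-to-ear path;
    all four responses are assumed to have the same length.
    """
    left_ear = add(convolve(left_ch, brirs["left_speaker_to_left_ear"]),
                   convolve(right_ch, brirs["right_speaker_to_left_ear"]))
    right_ear = add(convolve(left_ch, brirs["left_speaker_to_right_ear"]),
                    convolve(right_ch, brirs["right_speaker_to_right_ear"]))
    return left_ear, right_ear


# Toy impulse responses: a direct path plus a quieter delayed reflection,
# and an attenuated, delayed cross-talk path to the opposite ear.
brirs = {
    "left_speaker_to_left_ear": [1.0, 0.0, 0.5],
    "right_speaker_to_left_ear": [0.0, 0.5, 0.0],
    "left_speaker_to_right_ear": [0.0, 0.5, 0.0],
    "right_speaker_to_right_ear": [1.0, 0.0, 0.5],
}
left_ear, right_ear = render_binaural([1.0, 0.0], [0.0, 1.0], brirs)
# left_ear == [1.0, 0.0, 1.0, 0.0], right_ear == [0.0, 1.5, 0.0, 0.5]
```

Direct convolution is O(N·M); for real audio, an FFT-based convolution is the usual choice.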

class CarSceneAcoustics:
    """
    A class for the car acoustic environment.
    """

    def apply_car_acoustics_to_signal(
        self,
        enh_signal: np.ndarray,
        scene: dict,
        listener: dict,
        hrtf: dict,
        audio_manager: AudioManager,
        config: DictConfig,
    ) -> np.ndarray:
        """Applies the car acoustics to the enhanced signal."""

    def apply_hearing_aid(
        self, signal: np.ndarray, audiogram: np.ndarray, center_frequencies: np.ndarray
    ) -> np.ndarray:
        """
        Applies the hearing aid, which consists of the NAL-R prescription
        and a compressor.
        """

    def get_car_noise(
        self,
        car_noise_params: dict,
    ) -> np.ndarray:
        """Generates car noise."""

    def add_hrtf_to_stereo_signal(
        self, signal: np.ndarray, hrir: dict, hrtf_type: str
    ) -> np.ndarray:
        """Add a head-related transfer function using a binaural room impulse
        response (BRIR)."""

    def scale_signal_to_snr(
        self,
        signal: np.ndarray,
        reference_signal: np.ndarray = None,
        snr: float | None = 0,
    ) -> np.ndarray:
        """
        Scales the target signal to the desired SNR.
        We transpose the channels because pyloudnorm operates
        on arrays with shape [n_samples, n_channels].
        """

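The idea behind scale_signal_to_snr is to adjust one signal so that its level sits a given number of decibels above a reference signal. The recipe measures levels in LUFS with pyloudnorm; to keep this sketch dependency-free it substitutes plain RMS level in dB, so its numbers will differ from the recipe's. It also assumes that the SNR is defined as the level of the scaled signal minus the level of the reference, and that neither signal is silent.

```python
import math


def rms_db(x):
    """RMS level of a non-silent sequence in dB (relative to full scale 1.0)."""
    return 10 * math.log10(sum(v * v for v in x) / len(x))


def scale_to_snr(signal, reference, snr_db):
    """Scale `signal` so its RMS level is snr_db above that of `reference`.

    Substitutes RMS for the LUFS loudness used by the recipe.
    """
    gain_db = (rms_db(reference) + snr_db) - rms_db(signal)
    factor = 10 ** (gain_db / 20)
    return [v * factor for v in signal]


music = [0.5, -0.5, 0.5, -0.5]
noise = [0.1, -0.2, 0.15, -0.05]
scaled = scale_to_snr(music, noise, snr_db=6.0)
# rms_db(scaled) - rms_db(noise) is now 6 dB (up to rounding)
```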
3.4 Audio Manager

A simple utility class to manage the signals to be saved.

This class stores and saves intermediate signals to help you understand the effect of each step in the evaluation.

class AudioManager:
    """A utility class for managing audio files."""

    def add_audios_to_save(self, file_name: str, waveform: np.ndarray) -> None:
        """Add a waveform to the list of audios to save."""

    def save_audios(self) -> None:
        """Save the audios to the given path."""

    def clip_audio(
        self, signal: np.ndarray, min_val: float = -1, max_val: float = 1
    ) -> tuple[int, np.ndarray]:
        """Clip a signal to the given range."""

    def get_lufs_level(self, signal: np.ndarray) -> float:
        """Get the LUFS level of the signal."""

    def scale_to_lufs(self, signal: np.ndarray, target_lufs: float) -> np.ndarray:
        """Scale the signal to the given LUFS level."""

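Clipping is what the evaluation log warns about when it reports that samples were clipped in the evaluation signal. The dependency-free sketch below shows what a clip_audio-style helper does, assuming (from the tuple[int, np.ndarray] return type) that the integer is the number of samples that fell outside the range. It is illustrative only, not the AudioManager code.

```python
def clip_audio(signal, min_val=-1.0, max_val=1.0):
    """Clip samples to [min_val, max_val]; return (n_clipped, clipped_signal)."""
    # Count the samples that lie outside the allowed range
    n_clipped = sum(1 for v in signal if v < min_val or v > max_val)
    # Hard-clip every sample into the range
    clipped = [min(max(v, min_val), max_val) for v in signal]
    return n_clipped, clipped


n, out = clip_audio([0.2, 1.3, -1.7, -0.4])
# n == 2, out == [0.2, 1.0, -1.0, -0.4]
```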
See also

The car noise generation code is also available for you to explore in clarity.utils.car_noise_simulator.

class CarNoiseParametersGenerator:
    """
    A class to generate noise parameters for a car.
    The constructor takes a boolean flag to indicate whether some
    parameters should be randomized or not.
    The method `gen_parameters` takes a speed in kilometers per hour
    and returns a dictionary of noise parameters.
    """


class CarNoiseSignalGenerator:
    """
    A class to generate car noise signals.
    The constructor takes the sample_rate and duration of
    the generated signals.
    The method `generate_car_noise` takes parameters for the
    noise and generates the signal. These parameters
    can be generated by the CarNoiseParametersGenerator class.
    """

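The split between a parameter generator and a signal generator can be illustrated with a toy example. Everything below is hypothetical: the speed-to-parameter mapping, the parameter names and the one-pole low-pass filter are made up for illustration and are not the models used in clarity.utils.car_noise_simulator. The point is only the design: parameters are derived (optionally with randomness) from a speed, and a separate function turns them into a signal, so storing the parameters is enough to regenerate identical noise.

```python
import math
import random


def toy_noise_parameters(speed_kph, randomize=False, seed=None):
    """Hypothetical mapping from speed (km/h) to noise parameters."""
    rng = random.Random(seed)
    jitter = rng.uniform(-1.0, 1.0) if randomize else 0.0
    return {
        "cutoff_hz": 100.0 + 2.0 * speed_kph,         # made-up relation
        "gain_db": -30.0 + 0.1 * speed_kph + jitter,  # made-up relation
        "seed": rng.randrange(2**31),                 # makes the signal reproducible
    }


def toy_car_noise(params, sample_rate=44100, duration_s=0.1):
    """White noise through a one-pole low-pass filter, scaled by gain_db."""
    rng = random.Random(params["seed"])
    # One-pole smoothing coefficient for the requested cutoff frequency
    alpha = 1.0 - math.exp(-2.0 * math.pi * params["cutoff_hz"] / sample_rate)
    gain = 10 ** (params["gain_db"] / 20)
    y, out = 0.0, []
    for _ in range(round(sample_rate * duration_s)):
        y += alpha * (rng.gauss(0.0, 1.0) - y)
        out.append(gain * y)
    return out


params = toy_noise_parameters(speed_kph=100, seed=0)
noise = toy_car_noise(params)
```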
4. Inspecting Existing Configuration

All of the included recipe scripts take their configurable variables from the YAML files in the same directory as the script. Typically, these are named config.yaml; however, other names may be used if a directory contains more than one script.

We can inspect the contents of the config file:

path:
  root: ../../cadenza_task2_data_demo/cad1/task2
  audio_dir: ${path.root}/audio
  metadata_dir: ${path.root}/metadata
  music_dir: ${path.audio_dir}/music
  hrtf_dir: ${path.audio_dir}/eBrird
  listeners_train_file: ${path.metadata_dir}/listeners.train.json
  listeners_valid_file: ${path.metadata_dir}/listeners.valid.json
  scenes_file: ${path.metadata_dir}/scenes.json
  scenes_listeners_file: ${path.metadata_dir}/scenes_listeners.json
  hrtf_file: ${path.metadata_dir}/eBrird_BRIR.json
  exp_folder: ./exp # folder to store enhanced signals and final results

sample_rate: 44100 # sample rate of the input signal
enhanced_sample_rate: 32000 # sample rate for the enhanced output signal

nalr:
  nfir: 220
  fs: ${sample_rate}

compressor:
  threshold: 0.7
  attenuation: 0.1
  attack: 5
  release: 20
  rms_buffer_size: 0.064

soft_clip: False

enhance:
  average_level: -14 # Average level according Spotify's levels
  min_level: -19

evaluate:
  set_random_seed: True
  small_test: False
  save_intermediate_wavs: False
  split: valid # train, valid
  batch_size: 1 # Number of batches
  batch: 0 # Batch number to evaluate

# hydra config
hydra:
  run:
    dir: ${path.exp_folder}
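The ${...} entries are interpolations: when the configuration is loaded, each one is replaced with the value found at that dotted path, so every data path follows from path.root. The small resolver below illustrates that behaviour on a plain nested dict. It is a simplified sketch that handles only string values and absolute dotted keys, not OmegaConf's implementation.

```python
import re

# Matches ${dotted.key} references inside string values
INTERP = re.compile(r"\$\{([^}]+)\}")


def lookup(cfg, dotted):
    """Fetch a nested value such as 'path.audio_dir'."""
    node = cfg
    for key in dotted.split("."):
        node = node[key]
    return node


def resolve(cfg, value):
    """Recursively expand ${...} references in a string value."""
    if not isinstance(value, str):
        return value

    def replace(match):
        return str(resolve(cfg, lookup(cfg, match.group(1))))

    return INTERP.sub(replace, value)


config = {
    "path": {
        "root": "../../cadenza_task2_data_demo/cad1/task2",
        "audio_dir": "${path.root}/audio",
        "music_dir": "${path.audio_dir}/music",
    },
}
music_dir = resolve(config, config["path"]["music_dir"])
# '../../cadenza_task2_data_demo/cad1/task2/audio/music'
```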

5. Run Demo

Typically, all the work is done within Python, with configurable variables supplied by a YAML file that Hydra parses inside the Python code.

The code is executed from the command line, and new configuration values are supplied as arguments to override the defaults.

We are now ready to run the prepared Python script. However, the default path.root in config.yaml does not point to where we extracted the demo data, so we redirect the script to the correct folder by overriding path.root on the command line.
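Each key=value argument, such as path.root=../cad1/task2, replaces one entry of the loaded configuration before the script runs, and every interpolated path that depends on it changes with it. The sketch below mimics only that simple case on a nested dict; Hydra's real override grammar is much richer (adding or deleting keys, sweeps) and parses values into types, whereas this sketch keeps them as strings. The helper name apply_override is hypothetical.

```python
import copy


def apply_override(cfg, override):
    """Return a copy of cfg with one 'dotted.key=value' override applied."""
    dotted, value = override.split("=", 1)
    new_cfg = copy.deepcopy(cfg)
    *parents, leaf = dotted.split(".")
    node = new_cfg
    for key in parents:
        node = node[key]
    if leaf not in node:
        # Plain overrides may only change keys that already exist
        raise KeyError(f"unknown config key: {dotted}")
    node[leaf] = value  # kept as a string in this sketch
    return new_cfg


config = {
    "path": {"root": "../../cadenza_task2_data_demo/cad1/task2"},
    "evaluate": {"small_test": "False"},
}
cfg = apply_override(config, "path.root=../cad1/task2")
cfg = apply_override(cfg, "evaluate.small_test=True")
```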

!python enhance.py path.root=../cad1/task2


100%|███████████████████████████████████████████| 20/20 [00:03<00:00, 5.24it/s]

Next, we define some helper functions to load and play the audio files.

%%capture
!pip install more_itertools

from pathlib import Path

import IPython.display as ipd
import pandas as pd
from more_itertools import windowed
from scipy.io import wavfile

from clarity.utils.flac_encoder import read_flac_signal


def audio_player_list(signals, rates, width=270, height=40, columns=None, column_align='center'):
    """Generate a list of HTML audio players tags for a given list of audio signals.

    Notebook: B/B_PythonAudio.ipynb

    Args:
        signals (list): List of audio signals
        rates (list): List of sample rates
        width (int): Width of player (either number or list) (Default value = 270)
        height (int): Height of player (either number or list) (Default value = 40)
        columns (list): Column headings (Default value = None)
        column_align (str): Left, center, right (Default value = 'center')
    """
    pd.set_option('display.max_colwidth', None)

    if isinstance(width, int):
        width = [width] * len(signals)
    if isinstance(height, int):
        height = [height] * len(signals)

    audio_list = []
    for cur_x, cur_Fs, cur_width, cur_height in zip(signals, rates, width, height):
        # ipd.Audio expects multi-channel data as (n_channels, n_samples), hence .T
        audio_html = ipd.Audio(data=cur_x.T, rate=cur_Fs)._repr_html_()
        audio_html = audio_html.replace('\n', '').strip()
        audio_html = audio_html.replace('<audio ', f'<audio style="width: {cur_width}px; height: {cur_height}px" ')
        audio_list.append([audio_html])

    df = pd.DataFrame(audio_list, index=columns).T
    table_html = df.to_html(escape=False, index=False, header=bool(columns))
    table_html = table_html.replace('<th>', f'<th style="text-align: {column_align}">')
    ipd.display(ipd.HTML(table_html))


def load_and_display(audio_path, number_audios=None, start=20, end=30):
    """Play the segment between `start` and `end` seconds of up to
    `number_audios` FLAC/WAV files found under `audio_path`, two per row."""
    audio_files = [f for f in audio_path.glob('**/*') if f.suffix in ['.flac', '.wav']]
    if number_audios is not None:
        # Slicing with -1 would silently drop the last file, so only slice when asked
        audio_files = audio_files[:number_audios]

    signals, rates, columns = [], [], []

    # load signals
    for file_to_play in audio_files:
        if file_to_play.suffix == '.flac':
            signal, sample_rate = read_flac_signal(file_to_play)
        else:
            sample_rate, signal = wavfile.read(file_to_play)
        signals.append(signal[int(start * sample_rate):int(end * sample_rate)])
        rates.append(sample_rate)
        columns.append("/".join(list(file_to_play.parts[-3:-1]) + [file_to_play.stem]))

    # display signals in rows of two
    for sig_pair, rate_pair, col_pair in zip(
        windowed(signals, 2, step=2),
        windowed(rates, 2, step=2),
        windowed(columns, 2, step=2),
    ):
        # windowed() pads an odd-length final window with None; drop the padding
        keep = [i for i, sig in enumerate(sig_pair) if sig is not None]
        audio_player_list(
            [sig_pair[i] for i in keep],
            [rate_pair[i] for i in keep],
            columns=[col_pair[i] for i in keep],
        )

Now we have the enhanced output. Below, we can load and play the audio to listen to examples of the results.

load_and_display(Path("exp/enhanced_signals"), 2, start=20, end=30)

| valid/L5022/T133454_L5022_S2023_L5022_fma_133454 | valid/L5009/T142947_L5009_S2023_L5009_fma_064515 |

|---|---|

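Now that we have the enhanced signals, we can use the evaluation recipe to compute HAAQI scores for them. Setting evaluate.small_test=True evaluates only a small subset of the scenes (two in the run below), which keeps the runtime short: each scene takes about a minute to evaluate here.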

!python evaluate.py path.root=../cad1/task2 evaluate.small_test=True

[2023-05-22 14:28:07,157][__main__][INFO] - Evaluating from enhanced_signals directory

0%| | 0/2 [00:00<?, ?it/s]

[2023-05-22 14:29:11,265][__main__][INFO] - The combined score for scene T012526_L5076_S2023: 0.1489

50%|██████████████████████▌ | 1/2 [01:04<01:04, 64.10s/it]

[2023-05-22 14:29:20,979][recipes.cad1.task2.baseline.car_scene_acoustics][WARNING] - Scene T131979_L5007_S2023S500015: 10 samples clipped in evaluation signal.

[2023-05-22 14:30:14,952][__main__][INFO] - The combined score for scene T131979_L5007_S2023: 0.1092


100%|█████████████████████████████████████████████| 2/2 [02:07<00:00, 63.89s/it]

load_and_display(Path("exp/evaluation_signals"), 2, start=20, end=30)

| L5007/fma_131979/ref_signal_for_eval | L5007/fma_131979/ha_processed_signal |

|---|---|

We hope that this tutorial has been useful and has explained how to run the recipe scripts with the Hydra configuration system. The same approach applies to all of the recipes included in the repository.